Teams of Robots Can Help Us Build 3D Maps of the Ocean

One of the trickiest problems in marine science is figuring out how to map the ocean. If you look at a regular 2D map, with its detailed names and coastal features, you may think the problem was solved long ago. But the reality is that despite past and ongoing efforts, we know much less about the topography of the seafloor than we do about the surfaces of the moon, Mars and Venus. So when it comes to the 3D details of the ocean itself (things like its circulation, chemistry and life), it is perhaps not surprising that there are many blank spots in our understanding.

Oceanographers are interested in knowing the natural geography of the ocean: where the currents flow, where the water is cooler and warmer, where the nutrients that support life are concentrated. Since different species and processes occur at different depths, oceanographers need to look at the ocean in three dimensions. Unfortunately, collecting the necessary data requires sampling different parts of the water with all sorts of different instruments, often in places that are difficult and expensive for humans to reach. And the ocean is moving all the time, meaning the distribution of things like nutrients and temperature can change relatively quickly.

So how do you make a three-dimensional map of a large volume of water, complete with details about temperature, nutrient concentrations, and a plethora of other factors—as quickly as you possibly can?

You use robots. Oceanographers have been using underwater gliders and other autonomous underwater vehicles (AUVs) for a while now. But, increasingly, a new approach is emerging. Don’t just use one robot—use lots of them working together.

A network of robots that can coordinate with each other will be able to do the job much better than just one robot, says Dr. Naomi Ehrich Leonard. Dr. Leonard is a professor of engineering and applied mathematics at Princeton University (and a MacArthur Fellow), and she works extensively with groups of robots, figuring out how they can be programmed to behave like groups of animals that function together in the wild. The same processes that help a school of fish find food, for example, could help a group of robots do scientific work like data collection.

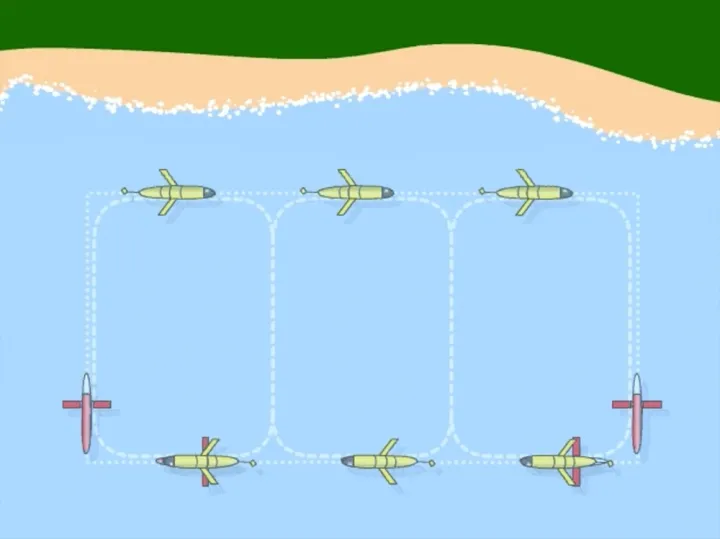

“The network,” says Dr. Leonard, can achieve “much more than the sum of its parts.” One robot, moving around the ocean on its own, is like one sensor. However, “if they’re networked together and they work together,” explains Dr. Leonard, “then what you have is a highly versatile and quite unique sensor array.” The robots can change their formation to measure areas with different shapes, or they can get closer to each other if they need to examine an area in more detail. If you program them correctly, an array of robots can locate a temperature gradient (an area of quickly changing temperature) in the ocean, follow the temperature gradient towards colder water, and move together to map the entire thing—all on their own and in less than a day.
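To make the gradient-following idea concrete, here is a minimal sketch in Python. It is an illustration only, not the control law Dr. Leonard's team used, which the article does not describe. It assumes each robot reports a horizontal position and one temperature reading, fits a plane to those readings by least squares to estimate the gradient, and then shifts the whole formation a fixed step toward colder water. The function names `estimate_gradient` and `step_toward_cold` and the `step` parameter are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def estimate_gradient(positions: List[Point], temps: List[float]) -> Tuple[float, float]:
    """Least-squares fit of T ~ a + gx*x + gy*y; returns the slope (gx, gy).

    Hypothetical helper: assumes one temperature reading per robot.
    """
    n = len(positions)
    if n < 3 or n != len(temps):
        raise ValueError("need at least three robots, with one reading each")
    # Center the data so the intercept drops out of the normal equations.
    mx = sum(x for x, _ in positions) / n
    my = sum(y for _, y in positions) / n
    mt = sum(temps) / n
    sxx = sum((x - mx) ** 2 for x, _ in positions)
    syy = sum((y - my) ** 2 for _, y in positions)
    sxy = sum((x - mx) * (y - my) for x, y in positions)
    sxt = sum((x - mx) * (t - mt) for (x, _), t in zip(positions, temps))
    syt = sum((y - my) * (t - mt) for (_, y), t in zip(positions, temps))
    det = sxx * syy - sxy ** 2
    if abs(det) < 1e-12:
        raise ValueError("robots are collinear; the gradient cannot be estimated")
    # Solve the 2x2 system [[sxx, sxy], [sxy, syy]] @ [gx, gy] = [sxt, syt].
    gx = (sxt * syy - syt * sxy) / det
    gy = (syt * sxx - sxt * sxy) / det
    return gx, gy


def step_toward_cold(positions: List[Point], temps: List[float], step: float = 1.0) -> List[Point]:
    """Shift every robot by `step` in the direction of decreasing temperature."""
    gx, gy = estimate_gradient(positions, temps)
    norm = math.hypot(gx, gy)
    if norm == 0.0:
        return list(positions)  # flat field: no preferred direction
    dx, dy = -step * gx / norm, -step * gy / norm
    return [(x + dx, y + dy) for x, y in positions]


# Three robots in a triangle, sampling a made-up field T = 10 + 0.5*x - 0.2*y.
robots = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
readings = [10.0 + 0.5 * x - 0.2 * y for x, y in robots]
moved = step_toward_cold(robots, readings, step=1.0)
```

A single robot could not run this estimate at one instant, since one reading says nothing about direction. Three or more non-collinear readings taken together do, which is one simple sense in which the array acts as a single sensor.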

In fact, evidence that an array of robots can do this kind of work well has been around for a while. In August of 2003, a network of three underwater gliders released by Dr. Leonard and her colleagues explored Monterey Bay, California for about twelve hours, collecting data on a temperature gradient. As they moved along horizontal paths through the bay, they also moved up and down, sampling temperatures at different depths. That meant the scientists were able to map the temperature of the bay in three dimensions—which was an essential part of the experiment.
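The up-and-down motion along a horizontal track can be pictured as a sawtooth. The short Python sketch below generates sample points of that shape, purely as an illustration. The triangle-wave profile, the function names, and the parameters `max_depth` and `cycle_length` are assumptions made for this example, not details reported from the 2003 experiment.

```python
import math
from typing import List, Tuple


def sawtooth_depth(distance: float, max_depth: float, cycle_length: float) -> float:
    """Depth on a triangle wave: 0 at the start of a cycle, max_depth at mid-cycle."""
    phase = (distance % cycle_length) / cycle_length
    return max_depth * (2 * phase if phase < 0.5 else 2 * (1 - phase))


def glider_samples(
    start: Tuple[float, float],
    end: Tuple[float, float],
    n_samples: int,
    max_depth: float,
    cycle_length: float,
) -> List[Tuple[float, float, float]]:
    """Evenly spaced (x, y, depth) sample points along a straight horizontal track."""
    if n_samples < 2:
        raise ValueError("need at least two samples")
    (x0, y0), (x1, y1) = start, end
    length = math.hypot(x1 - x0, y1 - y0)
    samples = []
    for i in range(n_samples):
        f = i / (n_samples - 1)  # fraction of the track covered so far
        depth = sawtooth_depth(f * length, max_depth, cycle_length)
        samples.append((x0 + f * (x1 - x0), y0 + f * (y1 - y0), depth))
    return samples


# A 4 km track with one dive-and-climb cycle every 2 km, down to 100 m.
track = glider_samples((0.0, 0.0), (4.0, 0.0), n_samples=5, max_depth=100.0, cycle_length=2.0)
```

Each sample carries a depth as well as a horizontal position, so readings from several gliders on different tracks can be combined into a three-dimensional picture rather than a flat one.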

Many oceanographers study upwelling, the process by which cool, nutrient-rich water rises from deeper layers of the ocean to sustain life near the surface. Upwelling is one of the most important phenomena in oceanography, and looking at temperature is a great way to study it. Because the researchers had 3D data on the temperature of the bay, they were able to tell exactly where the cold, nutrient-rich water was going, well below the surface. “That wasn’t previously possible,” Dr. Leonard remarks.

Since then, she and her colleagues have been pushing what’s possible further and further. In August 2006, they programmed ten robots to examine a volume of 800 cubic kilometers in northern Monterey Bay, over the course of 24 days. In this experiment, six of the robots coordinated themselves automatically. The coordination enabled the robots to decide where to go and how to move in order to get the best data possible. Then they sent that data straight to the researchers. “We had three different groups running real-time ocean modeling,” recalls Dr. Leonard. “They were predicting temperatures and currents and salinities in the ocean using this data in real time.” Based on the modeling, the researchers could intervene and tell the robots what they wanted them to look at next. In effect, humans joined the robot network.

Dr. Leonard’s research has huge implications for oceanographers looking to study anything from upwelling to phytoplankton concentrations to ocean acidification. The Leibniz Institute of Marine Sciences has used a fleet of underwater glider robots to explore physical and biogeochemical features of the ocean around the Cape Verde islands. In the U.S., Oregon State University uses more than a dozen gliders, launched from a coastal observatory, to collect data about the ocean off the Pacific Northwest. NOAA and other agencies are developing a plan for a large-scale Integrated Ocean Observing System (IOOS), which would use underwater glider robots to make 3D observations of the open ocean, coastal waters and Great Lakes. Using underwater glider networks, researchers are able to learn about the ocean faster and in more detail than was possible during decades of collecting data from aboard ships. As for what else robot networks could do, Dr. Leonard suggests they could “explore the ocean, improve ocean models and predictive skill, monitor biology, track animals, search out wrecks and archaeological sites.” The possibilities are endless.

She herself is moving on to work on ever-more-complicated autonomous robotic networks, exploring different ways for robots to communicate with each other, sense their environments and make decisions together. “I am particularly motivated by important and challenging problems in complex environments like the ocean,” she says, “where what the robotic networks measure, learn, and do can help make the world a better place.”